The Dependent Arising of Meaning and the Training of Language Models

The following brief essay on how language models are trained is extracted from a letter I wrote to a Zen priest. It is meant for a non-technical audience.

Greetings, and thank you for sharing your thoughts.

These models, and the encoders and decoders that make them up, are shaped by a process called training. During training, pages or paragraphs drawn from the internet and from books are presented to the models, and the models are made to predict which words should come next. Perhaps a demonstration is the best way to illustrate this.

During training, I would present the model with a page, paragraph or sentence such as the following: 'Love is heavy and light, bright and dark, hot and cold, sick and [blank].' I would then ask the model to predict which word should fill the blank.

A totally untrained model will fill the blank with random characters: 'Love is heavy and light, bright and dark, hot and cold, sick and zudbnv.'

Since the guess is incorrect, the training process penalises the model by adjusting its internal numbers, so that the next time it sees words resembling this sentence, it is less likely to guess "zudbnv".

A slightly trained model might fill the blank with some coherent word: 'Love is heavy and light, bright and dark, hot and cold, sick and weary.'

This is not an unreasonable guess, since people do say "sick and weary". But the model has not yet learnt to look more closely at the rest of the sentence and see that the blank word should stand to "sick" as "cold" stands to "hot" and "dark" to "bright": it should be an opposite.

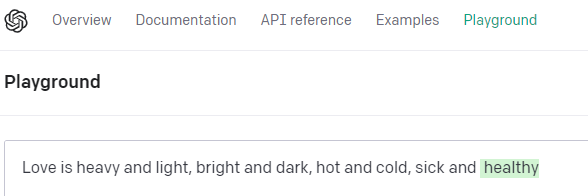

Finally, a well-trained model will understand the context and form of the whole sentence, and fill the [blank] word correctly. I have taken the liberty to test davinci-3 on this particular sentence:

Large language models are shown 1 to 2 trillion words, split into pages anywhere from 1 to 2,000 words long. Over and over again during training, they must make their best guesses at how to complete these pages, and they are admonished whenever a guess is incorrect or unsuitable.

These models learn to predict the most suitable next word by learning how words relate to one another when they appear together in a sentence, a paragraph, or a page. Ultimately, a model is a collection of numerical weights, and these weights encode how "relevant" one combination of words is to another.

After a model has looked at 1 to 2 trillion words and the relationships among them, a picture of the world emerges, encoded in these numbers that record how "relevant" words are to one another. If you will indulge my Mahayana upbringing a little, these models are a construction of the dependent arising of meaning in words, and of the relationships of meaning between words.

The wonderful thing is that learning the structure of language and the relationships between words is enough to create entities that appear so intelligent, and that have been a great boon to me in writing computer code and in understanding difficult essays and documents. I find the Two Truths perspective of Madhyamaka Buddhists expedient here: just as we humans use our words and books to navigate our conventionally existent (samvriti-satya) world, we should not be surprised that a dependently originated model, trained on our word-constructions, can navigate this conventionally existent world as well.

Yours sincerely,

Bryan Cheong